| Tweet |

Introduction

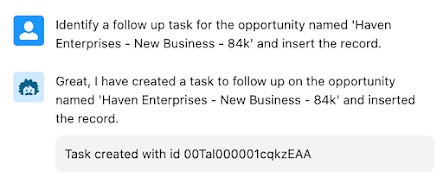

I've been testing out Salesforce's Einstein Copilot assistant for a few weeks now, but typically I've been tacking a single custom action onto the end of one or more standard actions, and usually a Prompt Template where I can easily ground it with some data from a specific record.

Something that hasn't been overly clear to me is how I can link a couple of custom actions and pass information other than record ids between them. The kind of information that would be shown to the user if it was a single action, or the kind of information that the user had to provide in a request.

Scenario

The other aspect I was interested in was some kind of DML. I've seen examples where a record gets created or fields get changed based on a request, but the beta standard actions don't have this capability, so it was clear I'd need to build that myself. So the scenario I came up with was : Given an opportunity, it's existing related activities, and a few rules (like if it's about to close someone should be in contact every day), Copilot suggests a follow up task and inserts it into the Salesforce database.

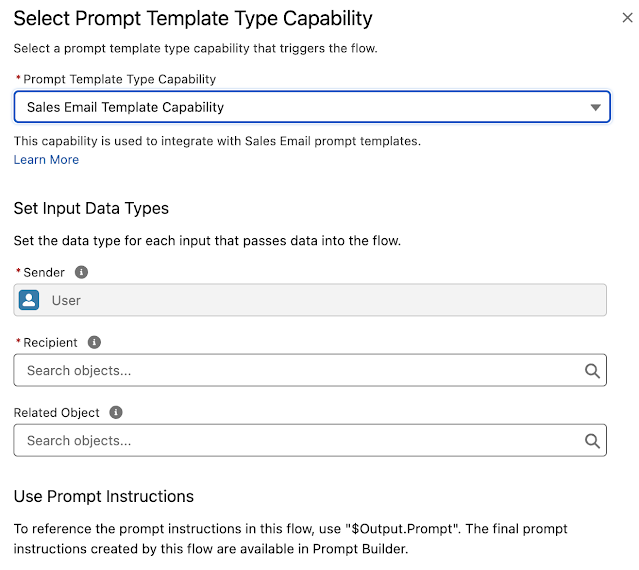

This can't easily (or sensibly, really) be done in a single custom action. I guess I could create a custom Apex action that uses a Prompt Template to get a task recommendation from the LLM and then inserts it, but it seems a bit clunky and isn't overly reusable. What I want here are two custom actions:

- A Prompt Template custom action that gets the suggestion for the task from the LLM

- An Apex custom action that takes the details of the suggestion and uses it to create a Salesforce task.

Implementation

Generate a recommendation for a follow up task in JSON format, including the following details:- The subject of the task with the element label 'subject'- A brief description with the element label 'description' - this should include the names of anyone other than the user responsible for the task who should be involved- The date the task should be completed by, in DD/MM/YYYY format with the element label' due_date'- The record id of the user who is responsible for the task with the element label 'user_id'- {!$Input:Candidate_Opportunity.Id} with the element label 'what_id'

Do not include any supporting text, output JSON only.

public with sharing class CopilotOppFollowUpTask

{

@InvocableMethod(label='Create Task' description='Creates a task')

public static List<String> createTask(List<String> tasksJSON) {

JSONParser parser=JSON.createParser(tasksJSON[0]);

Map<String, String> params=new Map<String, String>();

while (null!=parser.nextToken())

{

if (JSONToken.FIELD_NAME==parser.getCurrentToken())

{

String name=parser.getText();

parser.nextToken();

String value=parser.getText();

System.debug('Name = ' + name + ', Value = ' + value);

params.put(name, value);

}

}

String dateStr=params.get('due_date');

Date dueDate=date.newInstance(Integer.valueOf(dateStr.substring(6,10)),

Integer.valueOf(dateStr.substring(3, 5)),

Integer.valueOf(dateStr.substring(0, 2)));

Task task=new Task(Subject=params.get('subject'),

Description=params.get('description'),

ActivityDate=dueDate,

OwnerId=params.get('user_id'),

WhatId=params.get('what_id'));

insert task;

return new List<String>{'Task created with id ' + task.Id};

}

}

All that's needed to make it available for a Custom Action is the invocable aspect :

@InvocableMethod(label='Create Task' description='Creates a task')

When I define the custom action, it's all about the instruction:

which hopefully is enough for the Copilot reasoning engine to figure out it can use the JSON format output from the task recommendation action. Of course I still need to give Copilot the correct instruction so it understands it needs to chain the actions:

Related Posts

- Einstein Copilot Custom Actions

- Einstein Prompt Templates in Apex - the Sales Coach

- Einstein Prompt Grounding with Apex

- Hands on with Salesforce Copilot

- Hands On with Prompt Builder

- Salesforce Help for Prompt Builder

- Salesforce Admins Ultimate Guide to Prompt Builder